
Quality Standards for Tested Automation Equipment


As a seasoned expert in the field of automation spare parts, Sandy has dedicated 15 years to Amikon, an industry-leading company, where she currently serves as Director. With profound product expertise and exceptional sales strategies, she has not only driven the company's continuous expansion in global markets but also established an extensive international client network.

Throughout Amikon's twenty-year journey, Sandy's sharp industry insights and outstanding leadership have made her a central force behind the company's global growth and sustained performance. Committed to delivering high-value solutions, she stands as a key figure bridging technology and markets while empowering industry progress.

Why Quality Standards Matter More Than Ever

If you build or operate industrial automation and control hardware, you already know the stakes. A missed defect in a PLC panel, a miswired I/O block, or a flaky vision system does not just cause annoyance. It can scrap a batch, stop a line, or in the worst cases create a safety incident.

Across industries, the cost of poor quality has been documented in painful detail. Analysis cited by Sam-Solutions points to U.S. companies losing over $2.08 trillion in a single year due to poor-quality software. Global App Testing references a similar multi-trillion-dollar impact and notes that more than a third of smartphone users will uninstall an app after encountering bugs. In manufacturing, MachineMetrics emphasizes that poor quality translates directly into scrap, rework, returns, and warranty costs.

Those numbers are mostly from software and service environments, but the lesson applies directly to automation equipment. Every modern piece of industrial hardware is a cyber-physical system. The quality of the equipment, the embedded software, and the automated tests that sign them off are inseparable. When your test strategy is weak, you do not just risk a software glitch; you risk a stopped conveyor, a damaged robot, or a missed defect that escapes into a customer’s plant.

As a systems integrator, I have learned that “tested” means very little unless you can point to concrete standards, repeatable processes, and trustworthy data. The rest of this article is about what those standards look like in practice and how to apply them to automation equipment without turning your project into an academic exercise.

QA vs QC: Getting The Foundations Right

Before talking about test benches, cameras, and AI, it is worth aligning on terminology. Many teams use “QA” and “QC” interchangeably; the better-performing plants do not.

According to ISO 9000, summarized by Sam-Solutions, quality assurance is the part of quality management focused on providing confidence that quality requirements will be fulfilled. It is proactive and process-oriented. Quality control is the part focused on fulfilling quality requirements. It is reactive and product-oriented, typically embodied in inspections and tests.

Translated to automation equipment:

Quality assurance for automation equipment is everything you do to design quality into your panels, machines, and control systems. That includes your internal standards, design reviews, coding guidelines for PLC and HMI logic, documented test strategies, and the way you manage changes.

Quality control is what you do to verify that a specific build meets those standards. That shows up as incoming inspections on components, factory acceptance tests (FAT), site acceptance tests (SAT), end-of-line checks, and in some cases, automated quality control cells on the production line.

ProjectManager describes two principles that are especially relevant in this context. “Fit for purpose” means your delivered automation does what the customer actually needs in their environment. “Right first time” means defects are identified and addressed as early as possible, rather than waiting for a commissioning crisis.

If you remember nothing else, remember this: QA is the system; QC is the checkpoint. Robust, high-quality automation equipment needs both.

Manual Inspection, Automated QC, And AI On The Line

Most plants still rely heavily on manual inspection and informal tests. There is usually an experienced technician who “knows when a drive sounds wrong” or can “tell by looking” whether a control panel is built correctly. That experience is valuable, but it does not scale.

Tupl’s work on smart factories highlights one of the fundamental problems with manual visual inspection. In high-volume environments, human inspectors often achieve under 85% accuracy, especially as fatigue sets in and pressure for throughput increases. In contrast, the AI-based visual inspection toolkit they describe reports detection accuracy of around 99% with real-time predictions in under a second, along with up to a 90% reduction in the manual effort needed to configure models and label data.
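To make the gap between those accuracy figures concrete, here is a minimal Python sketch of the arithmetic. The run of 200 defective units is a hypothetical number chosen for illustration, and the rates are the approximate figures quoted above (85% treated as an upper bound for manual inspection, 99% for the AI toolkit). Real escape rates depend on the defect type and the inspection setup.

```python
def expected_escapes(defective_units: int, detection_rate: float) -> float:
    """Expected number of defective units that pass inspection undetected."""
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return defective_units * (1.0 - detection_rate)


# Hypothetical production run containing 200 defective units.
DEFECTIVE_UNITS = 200

for label, rate in [("manual inspection (~85%)", 0.85), ("AI-assisted inspection (~99%)", 0.99)]:
    escapes = expected_escapes(DEFECTIVE_UNITS, rate)
    print(f"{label}: about {escapes:.0f} defective units escape")
```

Under these assumptions, roughly 30 defective units reach the customer with manual inspection versus roughly 2 with AI-assisted inspection, about a fifteen-fold difference.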

OnLogic describes automated quality control as the use of integrated hardware and software, particularly industrial computers, sensors, and machine vision, to perform inspections faster and more consistently than humans can. They emphasize several benefits that any automation engineer will recognize immediately: reduced human error, lower rework and returns, 24/7 inspection capability, more uniform quality, and better compliance and traceability.

In practical plant terms, that means moving from a technician checking one unit in ten to a system that checks every unit, on every shift, in real time. For automation equipment, that might be:

- A vision system verifying that control panel wiring, markers, and terminal layouts match the standard.

- An automated functional test rack that energizes I/O, simulates field devices, and validates logic before a panel ever leaves the shop.

- A machine-mounted industrial PC capturing torque curves, vibration patterns, or cycle times as part of a first-pass quality gate.
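The first example above, a vision system verifying terminal layouts, ultimately reduces to comparing what the camera reads against the build standard. The Python sketch below shows only that comparison step. It assumes an upstream vision or OCR stage (not shown) has already produced a mapping from terminal position to wire marker; `STANDARD_LAYOUT` and `check_terminal_layout` are hypothetical names, and the terminal labels are illustrative.

```python
from typing import Dict, List

# Hypothetical reference layout from the panel build standard:
# terminal position -> expected wire marker.
STANDARD_LAYOUT: Dict[str, str] = {
    "X1:1": "24VDC",
    "X1:2": "0VDC",
    "X1:3": "DI_ESTOP_1",
    "X1:4": "DI_ESTOP_2",
}


def check_terminal_layout(observed: Dict[str, str],
                          standard: Dict[str, str] = STANDARD_LAYOUT) -> List[str]:
    """Return human-readable deviations between observed markers and the standard."""
    deviations = []
    for terminal, expected in standard.items():
        actual = observed.get(terminal)
        if actual is None:
            deviations.append(f"{terminal}: missing (expected {expected})")
        elif actual != expected:
            deviations.append(f"{terminal}: found {actual}, expected {expected}")
    # Terminals the camera found that the standard does not define.
    for terminal in sorted(set(observed) - set(standard)):
        deviations.append(f"{terminal}: not in standard layout")
    return deviations


# Markers as an assumed vision stage might report them for one panel.
observed = {"X1:1": "24VDC", "X1:2": "0VDC", "X1:3": "DI_ESTOP_2", "X1:5": "SPARE"}
for deviation in check_terminal_layout(observed):
    print(deviation)
```

An empty result means the panel matches the standard; anything else becomes a structured QC finding rather than a technician's note.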

The takeaway is not that humans go away. Sutherland’s guidance on QA automation is clear that there are strengths and weaknesses to both manual and automated testing. Manual tests are still the best way to evaluate usability, odd edge cases, and overall “feel.” Automated tests excel at high-frequency, repetitive checks where consistency matters more than creativity.

For industrial automation, the quality standard you are aiming for is a balanced approach: humans focus on the problems that require judgment, while machines quietly and relentlessly enforce your baseline.

Manual vs Automated Quality Control In Automation Equipment

A concise way to think about the trade-offs is summarized in the following table, derived from Sutherland, Tupl, and OnLogic:

| Aspect | Manual QC on Equipment | Automated or AI-Augmented QC |
|---|---|---|
| Execution speed | Limited to human pace and shifts | Continuous, high-speed, parallel checks |
| Accuracy and consistency | Varies with fatigue, training, and pressure | Highly consistent; AI visual systems have reported ~99% detection accuracy |
| Best suited for | Exploratory checks, usability, “weird” cases | Regression-like checks, dimensional and visual conformity |
| Labor and scalability | Labor-intensive; difficult to scale to every unit | High setup cost but low marginal cost; scales across units and lines |
| Data and traceability | Often minimal, unstructured notes | Structured logs, images, metrics, and historical trends |

A robust quality standard for tested automation equipment will deliberately specify where you expect each approach to be used, and what evidence you expect each to produce.

Frameworks And Standards That Actually Work In Plants

A lot of QA language comes from software and general manufacturing, but the principles adapt well to automation hardware.

Sam-Solutions outlines a structured quality control process that maps neatly onto testing automation equipment:

First, requirements analysis. In our world, that means crisp specifications for throughput, cycle time, safety functions, environmental conditions, and integration points. Vague requirements create untestable systems.

Second, test strategy. The strategy defines what will be tested, with which tools, and at what stage. For automation equipment, that might differentiate between panel-level tests, machine-level tests, and integrated system tests.

Third, test planning. This is the practical blueprint: who executes which tests, on what hardware or simulators, with what data, and under what conditions. It includes risk analysis and contingency plans.

Fourth, test design. Here you translate requirements into specific test cases and conditions. For automation equipment, that includes sequences such as power-on behavior, interlock testing, failure mode simulations, and recovery from faults.

Fifth, test execution. This is where manual and automated methods come together on actual hardware, with results logged and defects tracked.

Finally, reporting. That means summarizing executed tests, pass and fail outcomes, and defect statistics in a way that supports a go/no-go decision.
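The reporting step can be automated so that the go/no-go call follows explicit rules rather than a judgment made under schedule pressure. Below is a minimal Python sketch under two assumed rules that do not come from Sam-Solutions: any failed safety-critical test forces a no-go, and otherwise the overall pass rate must reach a configurable threshold (95% here, an arbitrary example value).

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class TestResult:
    name: str
    passed: bool
    safety_critical: bool = False


def summarize(results: Iterable[TestResult], min_pass_rate: float = 0.95) -> dict:
    """Summarize executed tests and derive a go/no-go decision."""
    results = list(results)
    total = len(results)
    failed = [r for r in results if not r.passed]
    pass_rate = (total - len(failed)) / total if total else 0.0
    safety_failures = [r.name for r in failed if r.safety_critical]
    # An empty run is never a "go": no evidence means no sign-off.
    go = total > 0 and not safety_failures and pass_rate >= min_pass_rate
    return {
        "total": total,
        "passed": total - len(failed),
        "failed": len(failed),
        "pass_rate": pass_rate,
        "safety_failures": safety_failures,
        "decision": "GO" if go else "NO-GO",
    }


report = summarize([
    TestResult("power_on_self_test", passed=True),
    TestResult("guard_door_interlock", passed=False, safety_critical=True),
    TestResult("recipe_download", passed=True),
])
print(report["decision"], report["safety_failures"])
```

Keeping the decision rules in code makes them reviewable and version-controlled like any other part of the test system.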

From the manufacturing side, MachineMetrics recommends aligning with ISO 9000-style quality systems, standard operating procedures, and documented formulas for production. For automation equipment, this translates into well-defined build standards, version-controlled control logic, and documented FAT/SAT procedures.

ProjectManager stresses that a QA program should be backed by measurable objectives, quality planning, monitoring, and audits. For integrators and OEMs, this might mean internal audits of test coverage and defect escape rates for delivered equipment, not just process audits on paper.

The important point is that none of these standards say “trust your gut and hope for the best.” They all assume you will be explicit about how you test, what you test, and what you consider acceptable.

Building A Practical Test Strategy For Automation Equipment

A test strategy for automation equipment should be risk-driven and grounded in how the equipment will actually be used. Several sources focused on test automation strategy, including TestRail and Qualitest, make the same core points.

You start by defining scope and objectives. In an automated packaging line, for example, you might state that your test strategy must ensure safety system effectiveness, verify that design throughput is achievable with representative product, confirm that all integrations to higher-level systems work reliably, and prove that the system can recover gracefully from defined fault conditions.

Next, you prioritize what to test and, crucially, what to automate. Sauce Labs, SmartBear, and other testing experts recommend focusing automation on repetitive, high-risk, and high-business-value scenarios. For industrial equipment, that typically means:

- Repeated safety sequences such as emergency stop, light curtain trips, and guard door interlocks that must never regress.

- Core production sequences such as start, stop, changeover, and recipe management that will run thousands of times.

- Interfaces such as fieldbus communications, alarms, and historian tags that need to be consistent across releases and variants.
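One way to make that prioritization explicit is a simple scoring model. The Python sketch below multiplies how often a check would be repeated by its severity and its business value; the formula, the 1 to 5 scales, and the example numbers are all assumptions for illustration, not a method prescribed by the sources above.

```python
from typing import List, NamedTuple


class Scenario(NamedTuple):
    name: str
    runs_per_release: int  # how often the check would be repeated
    severity: int          # 1 (cosmetic) .. 5 (safety or line stop), assumed scale
    business_value: int    # 1 .. 5, assumed scale


def automation_priority(s: Scenario) -> int:
    """Hypothetical score: higher means a stronger automation candidate."""
    return s.runs_per_release * s.severity * s.business_value


def rank(scenarios: List[Scenario]) -> List[str]:
    return [s.name for s in sorted(scenarios, key=automation_priority, reverse=True)]


candidates = [
    Scenario("E-stop and guard door interlocks", runs_per_release=20, severity=5, business_value=5),
    Scenario("Recipe changeover", runs_per_release=30, severity=3, business_value=4),
    Scenario("Historian tag mapping", runs_per_release=10, severity=2, business_value=3),
    Scenario("One-off prototype HMI screen", runs_per_release=1, severity=1, business_value=2),
]
print(rank(candidates))
```

With these example numbers, repeated safety and production sequences rise to the top, while a one-off prototype check lands at the bottom and is a natural candidate to stay manual.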

TestingXperts and others emphasize that not everything should be automated. One-off or highly volatile scenarios, such as early-stage prototypes or highly customized operator interactions, may be better served with manual tests, at least initially.

You also need to decide how you will manage test data and environments. BrowserStack and TestRail both point out that test environments should be as close to production as practical and that test data should be realistic yet safe. In automation equipment, that means:

- Using real or representative loads, not just empty conveyors or fixtures.

- Simulating upstream and downstream systems where the real ones are unavailable or impractical to connect.

- Ensuring that fault conditions are tested in a safe, controlled manner, often with simulation or temporary safety measures.

Finally, a strategy is only useful if it can be executed repeatedly. That is where test automation frameworks and continuous integration ideas, borrowed from software, start to matter for PLC and control logic development.

Designing Reliable Automated Tests And Test Rigs

A growing share of automation equipment quality rests on automated tests themselves: vision checks, functional test stands, and software test harnesses for control logic.

Software testing experts such as SmartBear, BrowserStack, and Sutherland offer consistent advice on how to design these automated tests so they are an asset rather than a maintenance burden.

Tests should be small, focused, and reusable. Instead of a single monolithic test script that exercises every function of a machine, you design smaller tests that each validate one behavior: a homing sequence, a safety function, a recipe download, or a particular alarm. In code, this aligns with the single responsibility principle; in hardware, it keeps your test racks and simulators modular.

Common functionality belongs in shared components, not duplicated across scripts. BrowserStack illustrates how duplicated setup code leads to fragile tests that are tedious to update when something changes. In the automation world, that might be a shared “power-up and reset to known state” routine for a machine, reused across many functional tests.
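The two ideas above, small single-purpose tests and a shared routine that puts the machine into a known state, can be sketched in plain Python. `SimulatedMachine` is a hypothetical stand-in for a machine simulator or test-rig driver. The tests follow pytest's convention of plain `test_*` functions with bare `assert` statements, so pytest could collect them, but they also run as ordinary functions.

```python
class SimulatedMachine:
    """Hypothetical stand-in for a machine simulator or test-rig driver."""

    def __init__(self) -> None:
        self.state = "UNKNOWN"
        self.homed = False
        self.faults = set()

    def power_up(self) -> None:
        self.state = "IDLE"

    def reset(self) -> None:
        self.faults.clear()
        self.homed = False
        self.state = "IDLE"

    def home(self) -> None:
        if self.faults:
            raise RuntimeError("cannot home with active faults")
        self.homed = True

    def trip_estop(self) -> None:
        self.faults.add("ESTOP")
        self.state = "STOPPED"


def known_state() -> SimulatedMachine:
    """Shared 'power up and reset to a known state' routine reused by every test."""
    machine = SimulatedMachine()
    machine.power_up()
    machine.reset()
    return machine


# Each test checks exactly one behavior and starts from the same known state.
def test_homing_sequence() -> None:
    machine = known_state()
    machine.home()
    assert machine.homed


def test_estop_stops_machine() -> None:
    machine = known_state()
    machine.trip_estop()
    assert machine.state == "STOPPED" and "ESTOP" in machine.faults


def test_homing_blocked_by_active_fault() -> None:
    machine = known_state()
    machine.trip_estop()
    try:
        machine.home()
    except RuntimeError:
        return  # expected: homing must be refused while a fault is active
    raise AssertionError("homing should be refused while a fault is active")
```

If the reset procedure changes, only `known_state` needs updating, not every test that depends on it.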

Test data belongs outside your test logic. SmartBear and Sauce Labs both emphasize data-driven testing, where input values live in external files or data stores. On a test stand for automation hardware, this might mean storing sets of recipes, fault conditions, or drive parameters separately so the same test logic can exercise many scenarios.
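A minimal data-driven sketch in Python: the test logic is written once and the scenarios live in a CSV table. In a real rig the table would be a separate, version-controlled file; here it is an in-memory string so the example is self-contained. The speed-limit rule, the column names (speeds in m/min), and the recipe values are hypothetical.

```python
import csv
import io
from typing import List, TextIO

# In a real rig this table would be a separate, version-controlled file;
# an in-memory string keeps the sketch self-contained. Values are hypothetical.
RECIPE_TABLE = """recipe,line_speed_mpm,rated_speed_mpm,expected_valid
Standard,40,60,true
AtLimit,60,60,true
Overspeed,75,60,false
"""


def recipe_is_valid(line_speed: float, rated_speed: float) -> bool:
    """Hypothetical rule under test: line speed must be positive and within the rated speed."""
    return 0 < line_speed <= rated_speed


def run_recipe_cases(source: TextIO) -> List[str]:
    """Run the same test logic once per data row; return names of failing rows."""
    failures = []
    for row in csv.DictReader(source):
        expected = row["expected_valid"].strip().lower() == "true"
        actual = recipe_is_valid(float(row["line_speed_mpm"]), float(row["rated_speed_mpm"]))
        if actual != expected:
            failures.append(row["recipe"])
    return failures


print(run_recipe_cases(io.StringIO(RECIPE_TABLE)))  # an empty list means every row passed
```

Adding a new scenario then means adding a row to the table, not writing another test.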

Environment realism is non-negotiable. Multiple sources, from Sam-Solutions to TestGrid, highlight that tests are only as good as the environment they run in. For automation equipment, this means that your simulation of field devices and upstream or downstream systems needs to mimic not only normal behavior but noise, delays, and failure patterns as well.
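To illustrate what mimicking noise, delays, and failure patterns can mean in a test environment, here is a hypothetical field-device simulator in Python. The Gaussian noise, the fixed sample delay, and the on/off dropout fault are simplifying assumptions; a real simulator would model the specific device and fieldbus behavior you care about.

```python
import random
from typing import Optional


class SimulatedPressureSensor:
    """Hypothetical field-device simulator: noisy readings, a fixed transport
    delay, and an injectable dropout fault (reported as None)."""

    def __init__(self, true_value: float, noise_std: float = 0.05,
                 delay_samples: int = 2, seed: int = 0) -> None:
        self.true_value = true_value
        self.noise_std = noise_std
        self.rng = random.Random(seed)        # seeded so test runs are repeatable
        self.buffer = [None] * delay_samples  # delay line; None means no valid data yet
        self.dropped_out = False

    def inject_dropout(self, active: bool = True) -> None:
        self.dropped_out = active

    def read(self) -> Optional[float]:
        """Return the reading from `delay_samples` reads ago, or None if unavailable."""
        if self.dropped_out:
            sample = None
        else:
            sample = self.true_value + self.rng.gauss(0.0, self.noise_std)
        self.buffer.append(sample)
        return self.buffer.pop(0)


sensor = SimulatedPressureSensor(true_value=5.0, delay_samples=2)
warm_up = [sensor.read() for _ in range(3)]      # first two reads are None while the delay fills
sensor.inject_dropout()
after_fault = [sensor.read() for _ in range(3)]  # dropout only becomes visible after the delay
print(warm_up, after_fault)
```

Because the fault appears only after the delay, a test built on this simulator can check that the control logic tolerates stale data without raising a nuisance alarm, and still detects a genuine loss of signal.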

Logging and reporting must be detailed and actionable. SGBI emphasizes enhanced logging and concise, actionable summaries for root-cause analysis. Your industrial test stand should capture not just “pass” or “fail,” but timestamps, I/O states, sensor readings, and images where appropriate.
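A minimal sketch of step-level structured logging in Python, using JSON Lines so each record can later be filtered and correlated. The field names, tag names, and values are hypothetical; the point is that every step records a timestamp, the relevant I/O states, sensor readings, and optionally a reference to an image.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Optional


def log_step(test_name: str, step: str, outcome: str,
             io_states: Dict[str, bool], readings: Dict[str, float],
             image_path: Optional[str] = None) -> str:
    """Emit one structured, machine-readable record per test step (JSON Lines)."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "test": test_name,
        "step": step,
        "outcome": outcome,
        "io": io_states,
        "readings": readings,
        "image": image_path,
    }
    line = json.dumps(record, sort_keys=True)
    print(line)  # a real rig would append this to a log file or historian
    return line


entry = log_step(
    "estop_channel_1", "trip_and_verify_stop", "pass",
    io_states={"DI_ESTOP_1": False, "DO_MOTOR_RUN": False},
    readings={"stop_time_ms": 142.0},
)
```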

Finally, version control is essential. SGBI notes how using version control for test assets enables change tracking and stable, auditable automation suites. For automation equipment, that principle should extend to PLC programs, HMI projects, test stand code, and configuration of vision systems and AI inspection models.
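Version control systems such as Git track changes to these assets, but a test report should also state exactly which versions it was run against. A minimal Python sketch, assuming the PLC program, HMI project, and vision configuration can be exported as files: compute a SHA-256 fingerprint of each file and store it alongside the test results. The file name in the usage example is hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, Iterable


def fingerprint(paths: Iterable[Path]) -> Dict[str, str]:
    """Return {file name: SHA-256 hex digest} for each exported asset.

    Keyed by file name for readability; use full paths if names can collide.
    """
    digests = {}
    for path in paths:
        h = hashlib.sha256()
        with open(path, "rb") as f:
            # Read in chunks so large project exports need not fit in memory.
            for chunk in iter(lambda: f.read(65536), b""):
                h.update(chunk)
        digests[path.name] = h.hexdigest()
    return digests


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        export = Path(tmp) / "line1_plc_export.xml"  # hypothetical export file
        export.write_bytes(b"<Program>...</Program>")
        print(fingerprint([export]))
```

If a later run shows different behavior, comparing the stored fingerprints tells you immediately whether the logic, the test configuration, or neither has changed.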

Manual vs Automated Testing Roles Around Equipment

Here it is useful to echo a distinction Sutherland and Sam-Solutions make between manual and automated testing roles, adjusted for industrial contexts:

Manual testing remains vital for commissioning, operator usability assessments, and exploratory troubleshooting when a system behaves oddly. It leverages the domain knowledge of operators, maintenance, and commissioning engineers.

Automated testing is ideal for repeated regression checks of control logic after changes, load and performance tests on equipment, and high-volume inspection tasks on the line. It allows you to rerun comprehensive checks overnight or between shifts without tying up your best technicians.

A mature quality standard for automation equipment will explicitly define what you expect from each and how they interact.

Making The Test Systems Themselves Trustworthy

The irony of test automation is that the more you rely on it, the more you need to worry about its quality. SGBI’s work on test reliability, along with insights from TestingXperts and Qualitest, is instructive here.

They describe automation test reliability as the ability to produce consistent, accurate, and maintainable test outcomes even as the underlying application changes. In our world, that means:

- Exception handling in test code so a single misbehaving sensor or communication glitch does not crash the entire test run.

- Self-healing capabilities in UI or vision-based tests so minor changes in screen layout or lighting do not invalidate the test, an idea highlighted in both SGBI and modern AI-powered QA tooling.

- Stable and well-managed test data to avoid flaky tests. SGBI recommends data created specifically for tests, cleaned up after use, and managed in centralized repositories.

- Robust logging and reporting so that when a test fails, you can quickly see whether the issue is with the equipment, the production process, or the test apparatus.

- Version control and disciplined change management, so you know exactly when a test or configuration changed and can correlate that with observed behavior.
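The exception-handling and reporting points above can be combined in a small test runner that isolates each test and classifies what went wrong. In this hypothetical Python sketch, test-rig code raises `ApparatusError` (an assumed exception type, not a library class) for problems such as communication timeouts, so a report can separate equipment failures from test-apparatus faults instead of aborting the whole run. The test names and limits in the example are illustrative.

```python
from typing import Callable, Dict


class ApparatusError(Exception):
    """Hypothetical: raised by test-rig code for comms glitches, missing sensors, etc."""


def run_suite(tests: Dict[str, Callable[[], None]]) -> Dict[str, str]:
    """Run each test in isolation so one failure cannot abort the whole run.

    "fail" = the equipment failed a check; "apparatus_error" = the test rig
    misbehaved; "error" = an unexpected bug, most likely in the test code.
    """
    outcomes = {}
    for name, test in tests.items():
        try:
            test()
            outcomes[name] = "pass"
        except AssertionError:
            outcomes[name] = "fail"
        except ApparatusError:
            outcomes[name] = "apparatus_error"
        except Exception:
            outcomes[name] = "error"
    return outcomes


def check_homing() -> None:
    pass  # placeholder for a passing check


def check_brake_time() -> None:
    raise AssertionError("brake time 180 ms exceeds 150 ms limit")  # illustrative values


def check_fieldbus_tags() -> None:
    raise ApparatusError("timeout talking to the I/O simulator")


demo = run_suite({"homing": check_homing, "brake_time": check_brake_time,
                  "fieldbus_tags": check_fieldbus_tags})
print(demo)
```

A run that ends with `apparatus_error` entries points maintenance at the test stand rather than the machine, which helps keep the stand from being bypassed.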

These considerations are not academic. When an automated test stand misbehaves in a plant, it is often treated as an annoyance or bypassed. Once that happens, your quality standard collapses, and you are back to tribal knowledge. Building reliability into the test systems themselves is therefore part of your overall equipment quality standard, not a nice-to-have.

Measuring What Matters: KPIs And ROI

Quality standards that do not connect to measurable outcomes rarely survive the first schedule crunch. The good news is that you can measure the impact of higher-quality testing and automated QC on automation equipment in hard numbers.

MachineMetrics recommends several QA-focused KPIs in manufacturing that translate well to tested automation equipment:

- Specification compliance, meaning the percentage of delivered panels, machines, or systems that meet all documented acceptance criteria without deviation.

- First pass yield, the proportion of units that pass all tests without rework. High first pass yield on control panels or module assemblies is a direct indicator of effective upstream design and QA.

- Scrap and rework cost associated with automation-related defects. As QA automation experts such as Qarma and others note, automation can reduce human error and expand regression coverage, which should drive these costs down over time.

- On-time shipment and delivery performance, because robust test processes reduce last-minute surprises that delay delivery or commissioning.

- Response time to quality issues, especially when combined with automated logging and predictive monitoring as described by MachineMetrics and OnLogic.
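First pass yield and specification compliance are simple ratios once each unit's test history is recorded. A minimal Python sketch with hypothetical data for eight panels, two of which needed rework and one of which shipped with a documented deviation:

```python
from typing import List, NamedTuple


class UnitRecord(NamedTuple):
    serial: str
    passed_first_time: bool   # passed every test with no rework
    meets_all_criteria: bool  # delivered without any deviation from acceptance criteria


def _ratio(flags: List[bool]) -> float:
    if not flags:
        raise ValueError("no units recorded")
    return sum(flags) / len(flags)


def first_pass_yield(units: List[UnitRecord]) -> float:
    return _ratio([u.passed_first_time for u in units])


def spec_compliance(units: List[UnitRecord]) -> float:
    return _ratio([u.meets_all_criteria for u in units])


# Hypothetical batch: P003 and P006 needed rework; P006 also shipped with a deviation.
units = [UnitRecord(f"P{i:03d}", i not in (3, 6), i != 6) for i in range(1, 9)]
print(f"FPY: {first_pass_yield(units):.1%}, compliance: {spec_compliance(units):.1%}")
```

For this batch the sketch reports a first pass yield of 75.0% and specification compliance of 87.5%: rework hurts yield even when the final product conforms.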

On the software side of automation, the same kinds of metrics used by test automation teams apply. Call Criteria and others suggest tracking defect detection rate, coverage, time to resolution, and automation rate. TestingXperts adds that the workflow automation market is projected to exceed tens of billions of dollars in the coming years, underlining that organizations are betting heavily on automation to keep up.
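Two of those metrics can be sketched the same way. Definitions vary between teams; the ones below are common formulations assumed for illustration rather than taken from Call Criteria: defect detection rate as the share of all known defects caught before delivery (for example during FAT), and automation rate as the share of test cases that run without manual execution. The counts in the example are hypothetical.

```python
from typing import Optional


def defect_detection_rate(found_before_delivery: int, found_after_delivery: int) -> Optional[float]:
    """Share of known defects caught before delivery; None if no defects are known yet."""
    total = found_before_delivery + found_after_delivery
    return found_before_delivery / total if total else None


def automation_rate(automated_cases: int, total_cases: int) -> float:
    """Share of test cases that run without manual execution."""
    if total_cases <= 0:
        raise ValueError("total_cases must be positive")
    return automated_cases / total_cases


# Hypothetical project: 45 defects found in FAT, 5 found after delivery on site;
# 120 of 200 test cases automated.
print(defect_detection_rate(45, 5), automation_rate(120, 200))
```

Tracked per project, a falling defect detection rate is an early warning that defects are escaping to site, where they are most expensive to fix.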

Case studies outside of pure manufacturing still tell a relevant story. TestingXperts describes a U.S. InsurTech firm that achieved roughly a 40% improvement in operational efficiency and a 99% reduction in manual effort by automating a complex validation process. Tupl reports dramatic reductions in manual labor for model setup and large jumps in detection accuracy in visual inspection. The numbers will look different in your plant, but the pattern is consistent: well-designed automation of tests and quality checks pays for itself in time saved, errors avoided, and confidence gained.

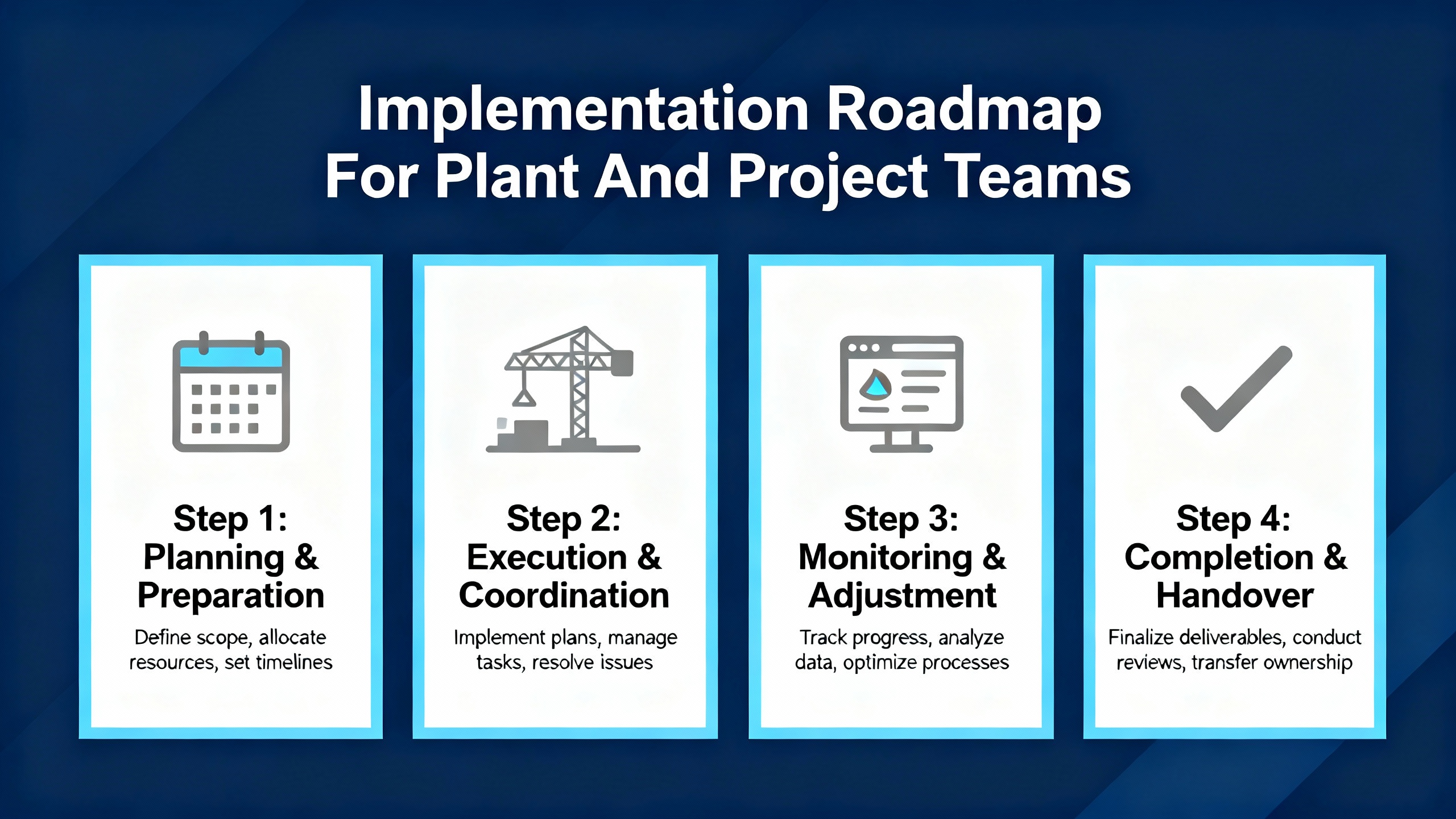

Implementation Roadmap For Plant And Project Teams

Bringing these standards to life is less about buying the latest AI camera and more about executing a disciplined roadmap. The collective experience in the Ministry of Testing community and multiple QA strategy guides offers practical themes that map well onto automation projects.

Start by understanding your current reality. Document how equipment is specified, built, and tested today. In many plants, the only true test is “we made it run on site,” and that is exactly where late defects are most expensive.

Engage the people closest to the risk. In software, forum practitioners advise testers to talk directly to users and stakeholders. In automation, that means operators, maintenance, process engineers, and safety professionals. Ask where equipment failures hurt the most, which behaviors matter most, and what failure modes are hardest to deal with.

Prioritize by business and safety risk, not by convenience. Risk-based testing, as recommended by Testlio and Qualitest, means you focus limited test and automation resources where failures are most costly or dangerous. For automation equipment, that typically means safety and high-value production flows first.

Establish clear ownership. Articles from QT and others stress that many test automation efforts fail because nobody owns them. In a plant, you need explicit responsibility for test benches, automated QC cells, and test assets, with time allocated to maintain them.

Start small and prove value. Multiple test automation guides recommend beginning with a small set of high-value workflows and building from there. For a systems integrator, that might mean automating a standard set of tests for your most common panel style before tackling custom machinery.

Integrate with your existing delivery processes. Continuous integration and continuous delivery are not just for web apps. In many controls teams, it is now feasible to run automated regression tests on PLC and HMI code every time changes are made, mirroring software practices described by Global App Testing and TestRail.
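A minimal illustration of the regression idea in Python. Running the real PLC program requires a vendor simulator or a dedicated PLC test framework, which is outside this sketch; instead, a hypothetical Python model of a start/stop seal-in rung with interlocks is checked against a truth table. A CI job could run a script like this on every commit and fail the build whenever the returned list of failures is non-empty.

```python
from typing import List, Tuple


def motor_run(start: bool, stop_ok: bool, guard_closed: bool,
              estop_ok: bool, running: bool) -> bool:
    """Model of one scan of a seal-in rung: start latches, any interlock drops out.

    stop_ok is True while the (normally closed) stop button is not pressed.
    """
    permissive = stop_ok and guard_closed and estop_ok
    return permissive and (start or running)


# (description, start, stop_ok, guard_closed, estop_ok, running_before, expected)
CASES: List[Tuple[str, bool, bool, bool, bool, bool, bool]] = [
    ("start from idle",         True,  True,  True,  True,  False, True),
    ("seal-in holds",           False, True,  True,  True,  True,  True),
    ("stop button drops motor", False, False, True,  True,  True,  False),
    ("open guard blocks start", True,  True,  False, True,  False, False),
    ("e-stop drops motor",      False, True,  True,  False, True,  False),
]


def run_regression() -> List[str]:
    """Return the descriptions of any cases whose result differs from expectation."""
    failures = []
    for desc, start, stop_ok, guard, estop, running, expected in CASES:
        if motor_run(start, stop_ok, guard, estop, running) != expected:
            failures.append(desc)
    return failures


failed = run_regression()
print("all cases passed" if not failed else f"regressions: {failed}")
```

The truth table doubles as documentation: reviewers can read the intended interlock behavior directly from the test cases.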

Communicate relentlessly. The Ministry of Testing community stresses that testers should be transparent about constraints and collaborate with developers rather than adopt an adversarial stance. The same applies to automation: designers, builders, testers, and operators should share a clear view of what is being tested, what is not, and why.

Above all, treat test automation and quality standards as engineering work, not as clerical overhead. Parasoft, SmartBear, and others repeatedly point out that robust test automation uses the same engineering disciplines as production code: good design, clean code, reviews, and ongoing investment.

FAQ

How much test automation do I really need around my automation equipment? There is no universal percentage. Research cited by TestGrid indicates that about a third of companies aim to automate between half and three-quarters of their testing, but the right level for you depends on your risk profile and resources. Focus first on high-risk and high-frequency scenarios, especially safety functions, critical production flows, and integrations that are costly to debug in the field.

When should I invest in AI-based visual or predictive quality systems? AI-driven inspection and predictive quality, as described by Tupl and OnLogic, make the most sense when manual inspection is a bottleneck, when accuracy is inconsistent, or when you need to inspect every part continuously. If you already struggle to maintain consistent visual checks on panels, assemblies, or mechanical components, and you have sufficient data volume, AI tools can significantly raise detection accuracy and reduce labor.

How do I keep quality standards from becoming paperwork that nobody follows? Tie every standard to a measurable outcome and a real pain point. MachineMetrics highlights KPIs like first pass yield and scrap rates; QA automation sources emphasize defect rates and time to resolution. If a procedure does not help improve one of those metrics, simplify or remove it. Regularly review your test suites and standards, pruning low-value tests, just as Parasoft and other testing experts recommend for software.

Closing

Well-tested automation equipment is not an accident; it is the result of clear standards, disciplined QA, and smart use of automation and data. When you combine process-oriented assurance with reliable, automated quality control on the floor, you stop relying on heroics at commissioning time and start delivering systems that simply run. That is what your operators want, what your customers pay for, and what a seasoned integration partner should help you build every time.

References

- https://www.cs.uic.edu/~i440/Grad%20Papers/440%20Fall%202024/Papers/CS440_GradPaper_NidhiRJois%20-%20Final.pdf

- https://www.globalapptesting.com/best-practices-for-qa-testing

- https://www.browserstack.com/guide/test-automation-standards-and-checklist

- https://callcriteria.com/quality-assurance-automation/

- https://www.machinemetrics.com/blog/quality-assurance

- https://www.projectmanager.com/blog/quality-assurance-and-testing

- https://www.qarmainspect.com/blog/quality-assurance-automation

- https://sgbi.us/10-strategies-to-enhance-reliability-of-automation-tests/

- https://www.testingxperts.com/blog/qa-automation-strategy

- https://tupl.com/automating-quality-assurance-in-smart-factories-with-ai/
